Fragmented project knowledge

Specs, docs, and chat logs live in different tools. Teams waste hours syncing the truth before every iteration.

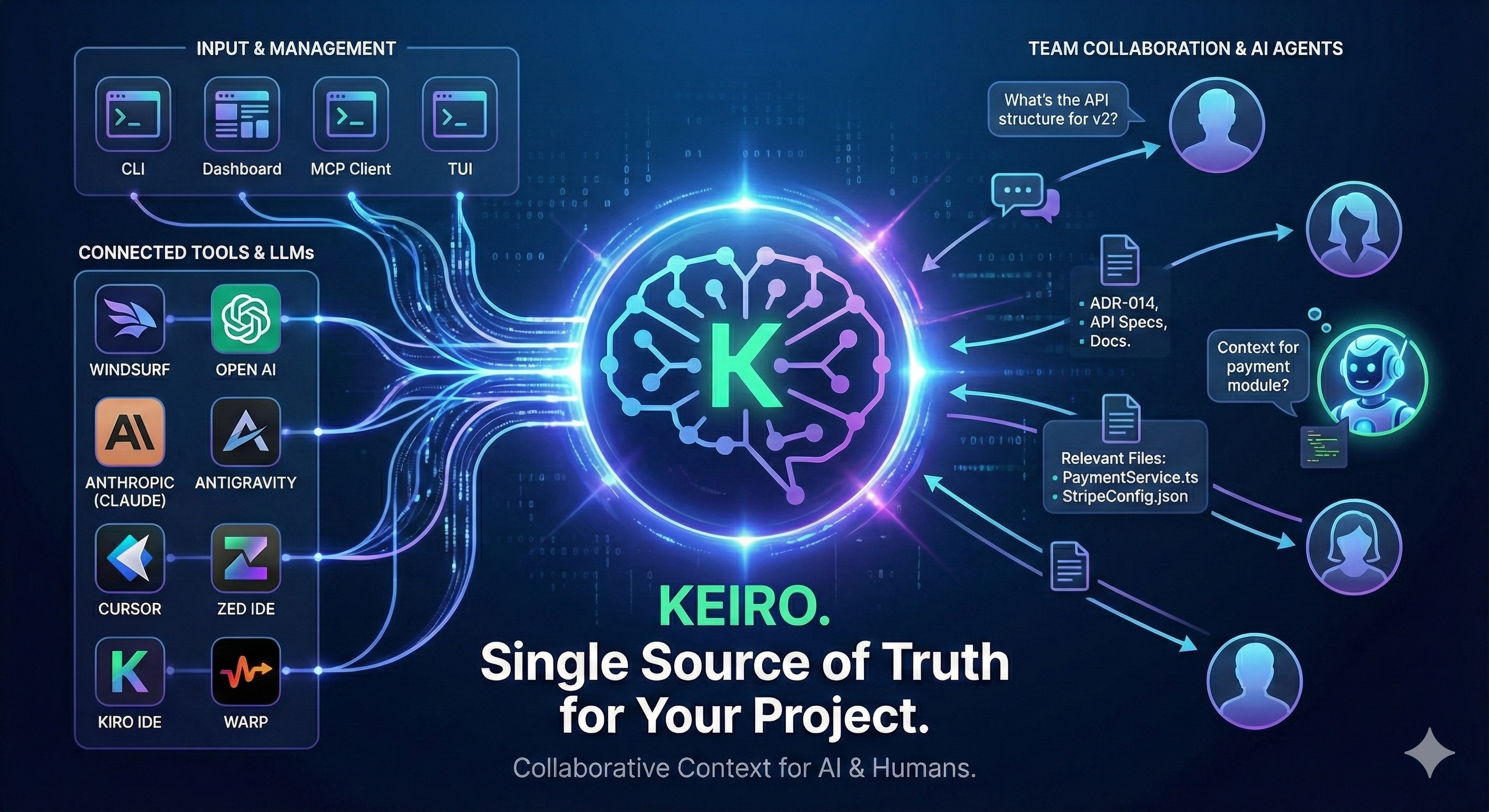

KEIRO is the shared memory layer for project knowledge, available via CLI, MCP server, REST API, and dashboard.

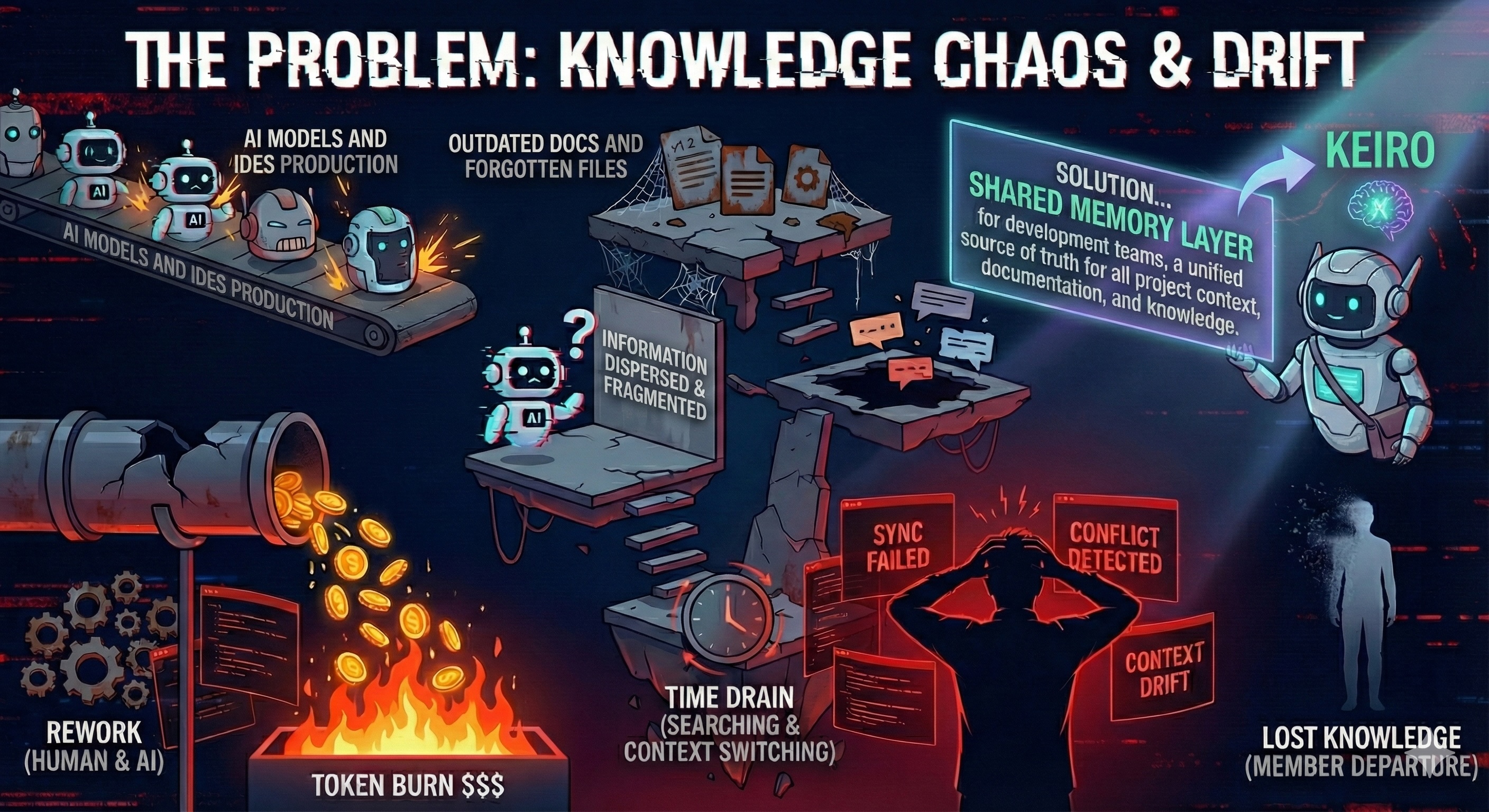

Problem

Multiple tools, scattered files, and isolated AI agents create drift. Without a single source of truth, teams struggle to stay aligned.

LLMs re-ingest the same knowledge on every call because there is no shared, curated memory.

Knowledge diverges between IDEs, APIs, and dashboards, so changes go unnoticed and rework piles up.

Solution

A shared memory layer that organizes context into living nodes, keeps them current, and serves them everywhere. Git for context.

AI-powered agents organize the documents and updates you send through the CLI, MCP server, or dashboard into knowledge nodes that evolve automatically as the project moves forward.

Query or update knowledge from IDEs, MCP clients, CLIs, APIs, or the dashboard: everyone sees the same source.

Builders stay in flow. KEIRO keeps the latest state ready, so no one hunts through stale docs.

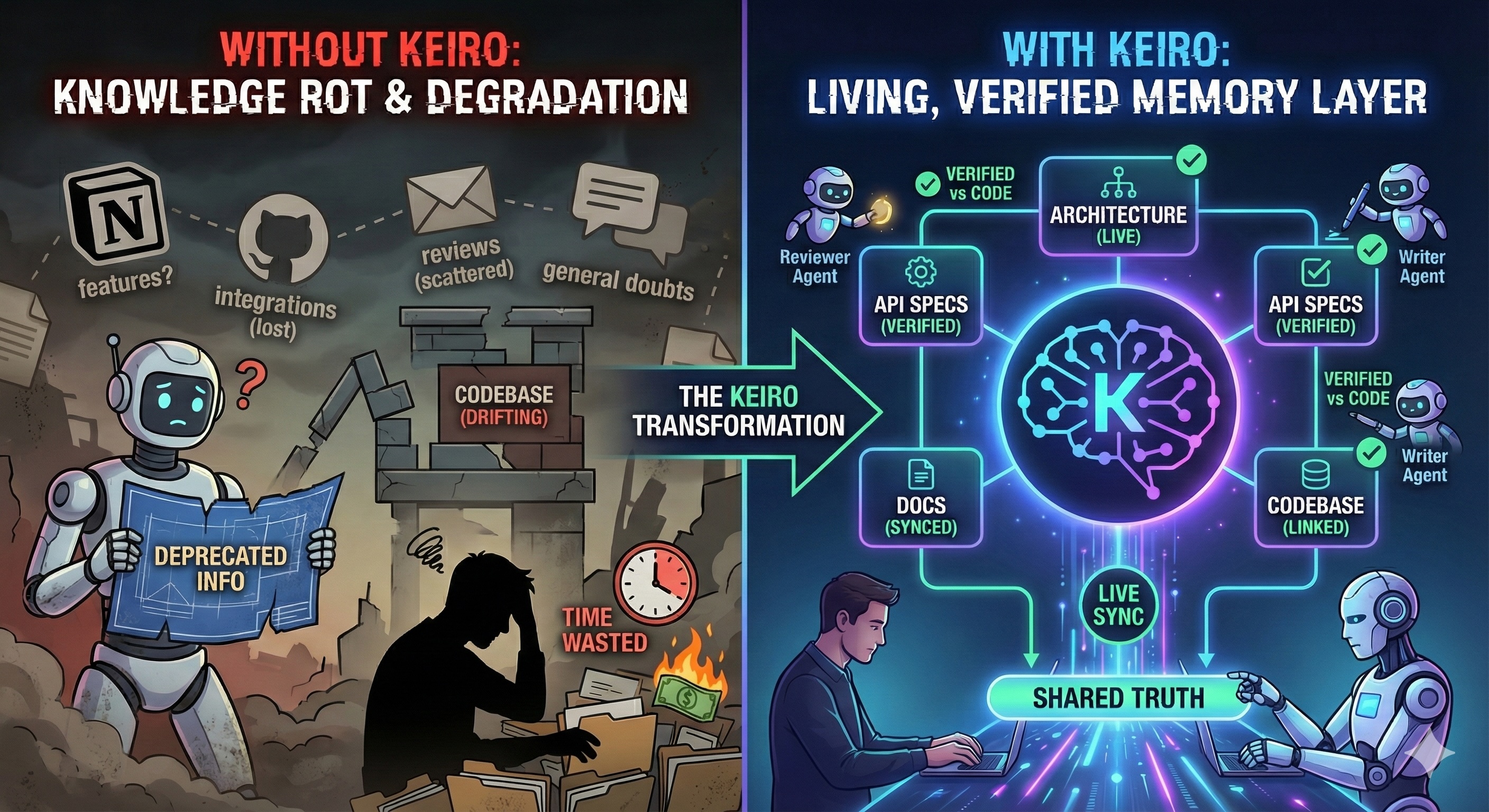

Without KEIRO

Fragmented knowledge, disconnected tools, and constant context switching: this is the reality without KEIRO.

Impact

KEIRO eliminates waste and accelerates delivery by curating project context and serving it from a single source.

LLMs can reuse curated context instead of re-ingesting raw files on every call.

Knowledge nodes are pre-indexed with embeddings, ready for instant search from the CLI, MCP server, and dashboard.

A single source of truth keeps documentation and project knowledge more aligned across tools and teammates.
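To make "pre-indexed knowledge nodes with embeddings ready for instant search" concrete, here is a minimal Python sketch of searching embeddings that were computed ahead of time. It is not KEIRO's implementation or API: the node IDs, the toy 3-dimensional vectors, and the `cosine` and `search` helpers are all hypothetical, and a production index would likely use model-generated embeddings and an approximate nearest-neighbor structure instead of a linear scan.

```python
import math

# HYPOTHETICAL pre-indexed nodes: each node ID maps to an embedding computed
# once at ingestion time. Toy 3-dimensional vectors, for illustration only.
NODE_INDEX: dict[str, list[float]] = {
    "auth-spec": [0.9, 0.1, 0.0],
    "billing-doc": [0.1, 0.8, 0.3],
    "deploy-notes": [0.0, 0.2, 0.9],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec: list[float], k: int = 2) -> list[tuple[str, float]]:
    """Return the k stored nodes most similar to the query embedding.

    Node embeddings already exist, so answering a query only requires
    embedding the query itself and comparing it against stored vectors.
    """
    scored = [(node_id, cosine(query_vec, vec)) for node_id, vec in NODE_INDEX.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # A query embedding that points in roughly the same direction as "auth-spec".
    print(search([1.0, 0.0, 0.0], k=1))
```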

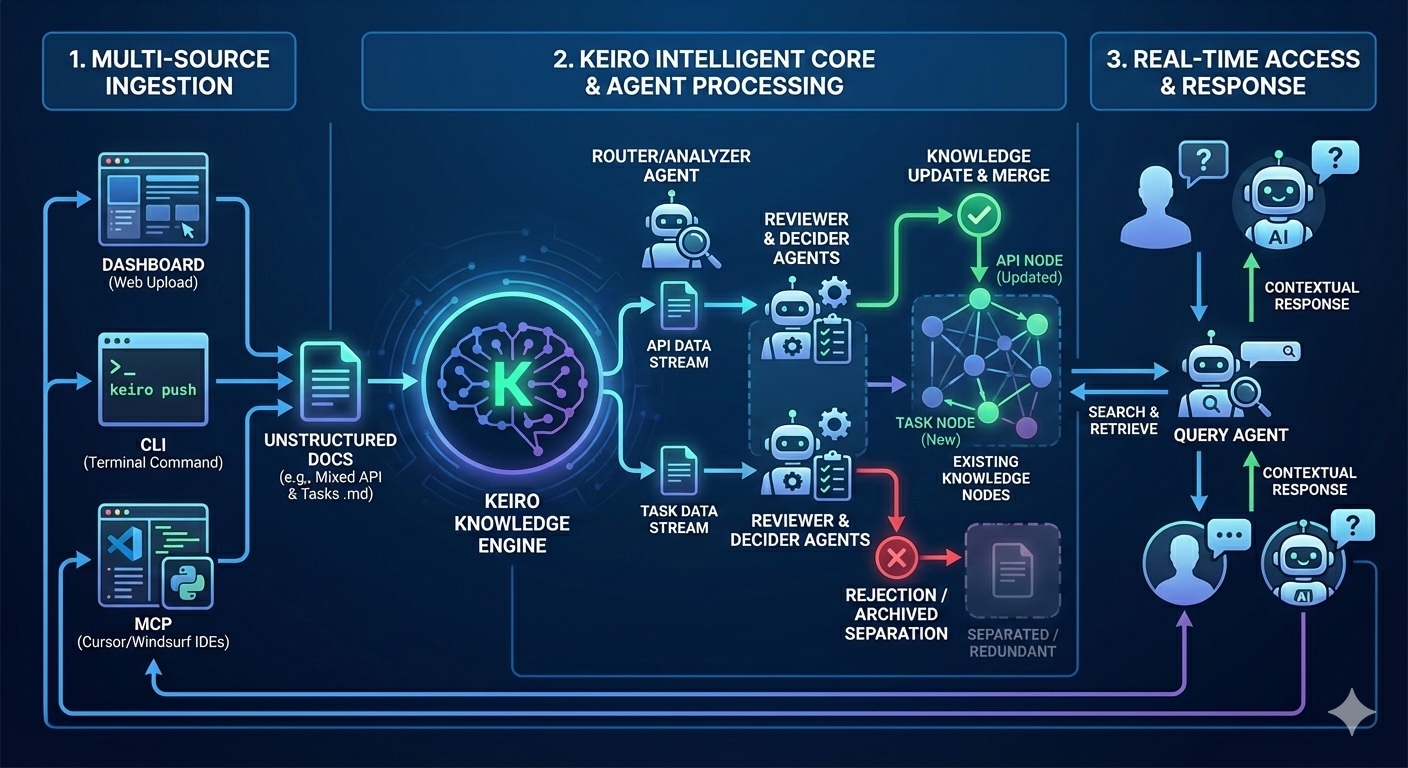

Visual Flow

The complete workflow showing how knowledge flows through the shared memory layer

Developers send docs and project artifacts into KEIRO through the CLI, MCP server, or dashboard. Content is normalized into knowledge nodes with semantic summaries and versioning. (Roadmap: automatic ingestion from repos, tickets, and PRs.)
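One way to picture "knowledge nodes with semantic summaries and versioning" is an append-only history per node, similar in spirit to commits in Git. The Python sketch below assumes that model and is not KEIRO's actual data format: `KnowledgeNode`, `NodeVersion`, and `update` are hypothetical names, collapsing whitespace stands in for real normalization, and the first-sentence "summary" is a placeholder for an AI-generated semantic summary.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class NodeVersion:
    """One immutable snapshot of a node's content (hypothetical structure)."""
    version: int
    content: str
    summary: str


@dataclass
class KnowledgeNode:
    """HYPOTHETICAL knowledge node: a stable ID plus an append-only version history."""
    node_id: str
    history: list[NodeVersion] = field(default_factory=list)

    @property
    def latest(self) -> NodeVersion:
        return self.history[-1]

    def update(self, raw_text: str) -> NodeVersion:
        """Normalize incoming text and record it as a new version.

        Content identical to the latest version does not create a new version.
        """
        content = " ".join(raw_text.split())  # trivial normalization: collapse whitespace
        if self.history and self.history[-1].content == content:
            return self.history[-1]
        # Placeholder summary (first sentence only); a real system would
        # presumably generate a semantic summary with a model.
        summary = content.split(". ")[0]
        snapshot = NodeVersion(len(self.history) + 1, content, summary)
        self.history.append(snapshot)
        return snapshot


node = KnowledgeNode("auth-spec")
node.update("Login uses OAuth 2.0.  Tokens expire after 1 hour.")
node.update("Login uses OAuth 2.0. Tokens expire after 30 minutes.")
print(node.latest.version, node.latest.summary)
```

Keeping every snapshot means older versions stay available for comparison while queries read `latest`.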

Embeddings, hybrid search, and routing metadata stay synced so LLM calls reference trusted context.

Teams query KEIRO from any environment, keeping IDE companions, bots, and humans aligned instantly.
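The page does not say how KEIRO's hybrid search combines its signals. A common technique for merging keyword and vector results is Reciprocal Rank Fusion (RRF), sketched below in Python purely as an illustration: the two input rankings are made up, and `k = 60` is the constant often used with RRF, not a documented KEIRO setting.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of node IDs with Reciprocal Rank Fusion.

    Each list adds 1 / (k + rank) to the score of every node it contains,
    with rank starting at 1. Nodes ranked highly by several retrievers rise.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, node_id in enumerate(ranking, start=1):
            scores[node_id] += 1.0 / (k + rank)
    # Sort by fused score (descending), breaking ties by ID for a stable order.
    return sorted(scores, key=lambda node_id: (-scores[node_id], node_id))


# HYPOTHETICAL result lists from two retrievers over the same nodes.
keyword_hits = ["deploy-notes", "auth-spec", "billing-doc"]  # e.g. a keyword/BM25-style index
vector_hits = ["auth-spec", "billing-doc", "runbook"]        # e.g. embedding similarity
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
```

Here `auth-spec` comes out first because both retrievers rank it near the top, even though only the vector list puts it first.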

FAQ & Glossary

Get answers to common questions and learn technical terms.

Still have questions?

Contact our support team

Ready for aligned delivery

KEIRO eliminates drift, reduces token spend, and gives developers a shared memory layer they can trust.

Stay in the loop

Get first access to KEIRO updates, hybrid search rollout, and YC release notes.